Theory: Distribution Trees and Reverse Path Forwarding

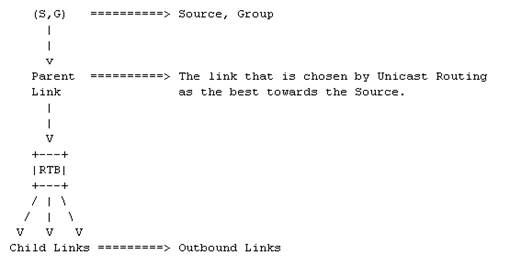

Source Distribution Tree:

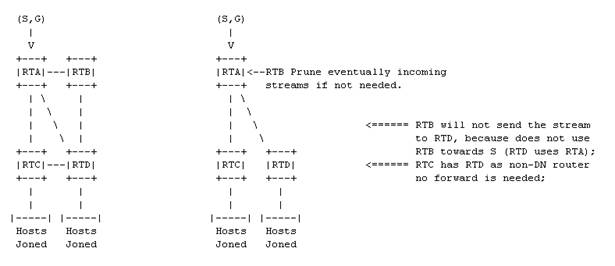

A Source S sends a stream intended for a Group G, denoted (S,G). A source tree is derived from the location of that specific Source, using normal unicast routing and the Reverse Path Forwarding (RPF) paradigm. Under RPF, each router determines, for a specific Source, which link is its Parent Link: the best link towards the Source according to the unicast routing table. Only traffic received on this link is accepted; the rest is dropped, which avoids looping traffic. Each router also determines which of its neighbors use the local router as their best path towards the Source (Downstream Routers, which have the link to the local router as their Parent Link): those routers will (potentially) need the stream from the local router. Non-Downstream Routers are expected to get the stream over their own Parent Link, which does not cross the local router. A pruning mechanism can be applied by routers that, although they sit on the shortest reverse path, do not need the stream (no IGMP joiners on any connected LAN).

Both PIM Dense Mode (which does not implement route exchange, but trusts the unicast protocol, whatever it is) and DVMRP (full routing protocol, no need for a unicast routing table) use Source Distribution Trees.

(1). Define the Parent Link for a Multicast Group as sent by the Source. If packets from that Source arrive from a non Parent Link, discard them (loop avoidance).

(2). Define Downstream Neighbors. A DN is a neighbor which has the local router in the best path towards the source.

(3). If the (S,G) stream is received on the Parent Link, forward the packets on all Child Links towards downstream neighbors. Do not forward the stream to non-DN routers (*).

(*). Prune messages are implemented in PIM and DVMRP as an optimization, for example when a router receives an (S,G) stream on a non-Parent Link, as in (1).
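
Rules (1) to (3) can be sketched in a few lines of Python. This is only an illustrative model, not how any router implements it: the class `SourceTreeRouter`, its fields and the interface names (`uplink`, `lan1`, `lan2`, `wan1`) are all hypothetical, and the Parent Link is assumed to be already known from the unicast routing table.

```python
from dataclasses import dataclass, field


@dataclass
class SourceTreeRouter:
    """Hypothetical per-router view of a source tree (all names are illustrative)."""
    # source address -> Parent Link chosen by unicast routing towards that source
    parent_link: dict = field(default_factory=dict)
    # source address -> Child Links leading to downstream neighbors
    child_links: dict = field(default_factory=dict)
    # (source, group) -> set of Child Links that have been pruned
    pruned: dict = field(default_factory=dict)

    def forward(self, source, group, in_iface):
        """Return the interfaces an (S,G) packet is replicated to."""
        # Rule (1): accept only on the Parent Link, otherwise discard.
        if self.parent_link.get(source) != in_iface:
            return []
        # Rules (2) and (3): send on Child Links towards downstream
        # neighbors, skipping branches that have been pruned.
        pruned = self.pruned.get((source, group), set())
        return [i for i in self.child_links.get(source, []) if i not in pruned]


router = SourceTreeRouter(
    parent_link={"192.168.1.1": "uplink"},
    child_links={"192.168.1.1": ["lan1", "lan2", "wan1"]},
    pruned={("192.168.1.1", "239.255.1.1"): {"wan1"}},
)
accepted = router.forward("192.168.1.1", "239.255.1.1", "uplink")
dropped = router.forward("192.168.1.1", "239.255.1.1", "lan1")
```

Here `accepted` holds the two unpruned Child Links, while `dropped` is empty because the packet arrived on a link other than the Parent Link.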

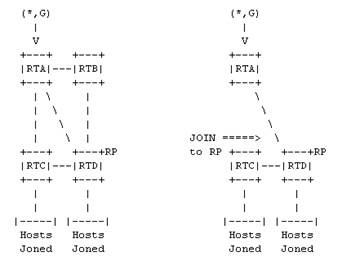

Shared Distribution Tree:

Source Trees ensure the best path but incur overhead and may spread traffic (at least in the initial stage) to many parts of the network where it is not needed. One way of saving resources is to act “as if” no host wants the stream. Traffic is forwarded to routers only when they need it, that is, when hosts on one or more of the downstream LANs have explicitly joined a Group using IGMP and the router has sent a join message to a “registrar” multicast router (the Rendezvous Point, RP). Normally the RP not only registers the join but also forwards the stream traffic. The location of the Source is not relevant for routers using a Rendezvous Point.
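
The “pull” behaviour of the shared tree can be illustrated with a toy model. It is a sketch under strong simplifications: the class `RendezvousPoint` and its methods are hypothetical names, and real join messages travel hop by hop towards the RP rather than being registered in a single table.

```python
class RendezvousPoint:
    """Hypothetical registrar: a group is sent only to routers that joined it."""

    def __init__(self):
        self.joined = {}  # group -> set of router names that sent a join

    def join(self, group, router):
        # Record an explicit join, triggered by IGMP joiners behind that router.
        self.joined.setdefault(group, set()).add(router)

    def targets(self, group):
        # No join received means nobody is sent the stream (pull model).
        return sorted(self.joined.get(group, set()))


rp = RendezvousPoint()
before = rp.targets("239.255.1.1")   # nobody has joined yet
rp.join("239.255.1.1", "RT6")
after = rp.targets("239.255.1.1")    # only the router that joined
```

The contrast with the source tree is that the default here is “send to nobody”, so no pruning is needed for routers that never asked for the stream.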

Of the protocols discussed here, only PIM Sparse Mode uses the Shared Distribution Tree model.

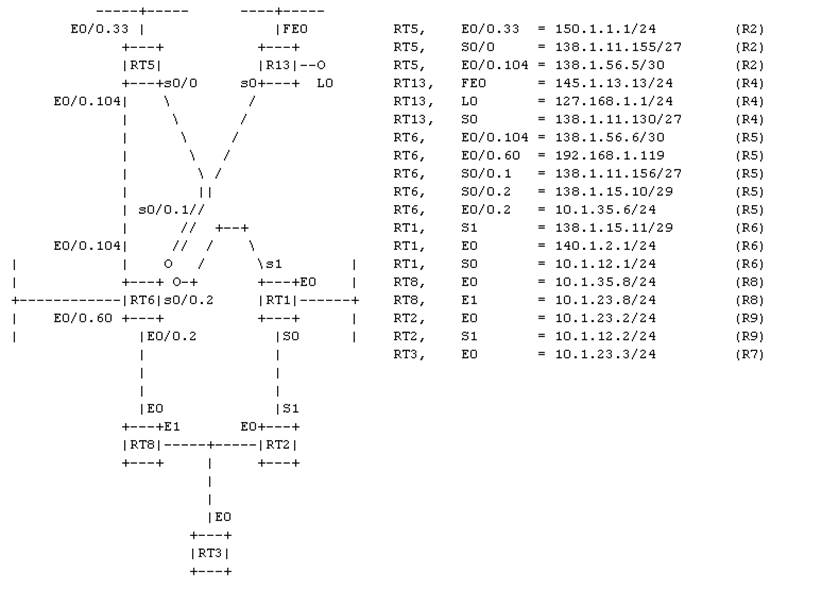

Multicast LAB Environment used

Note for setting up the LAB:

– Telnet must be done from RT5 (R2, 2610a) to all hosts.

– Switch must also be reconfigured.

– Using VLC to generate multicast stream:

– cd C:\Program Files\VideoLAN\VLC

– vlc "C:\Documents and Settings\Fabbri\My Documents\My Music\MP3" --sout udp://239.255.1.1 --ttl 12

– Using VLC to generate multicast stream:

– From IP Range = 192.168.1.1 / 192.168.1.X

– To IP Address = 239.1.1.1

PIM Dense Mode

PIM Dense Mode uses the Source Distribution Tree model and operates on a “flood and prune” principle. Multicast packets from the Source are flooded to all areas of a PIM-DM network using the RPF rule. PIM routers that receive multicast packets and have no directly connected multicast group members or PIM neighbors send a PRUNE message back up the source-based distribution tree toward the source of the packets. As a result, subsequent multicast packets are not flooded to pruned branches of the distribution tree. Note that if a prune is sent on a shared segment where other downstream routers are reachable and one of them still needs the stream, that router overrides the prune by sending a JOIN (prune override) message to the upstream router, which then keeps forwarding.

However, the PRUNED STATE in PIM-DM has a timer and TIMES OUT approximately every [3 minutes]. The entire PIM-DM network is then REFLOODED with multicast packets, followed by new prune messages. This re-flooding of unwanted traffic throughout the PIM-DM network consumes bandwidth and makes PIM-DM scale poorly in large networks (note: this effect can be reduced if all routers support state-refresh packets, which work as a sort of keepalive and keep the prune state from timing out).

Further, if several multicast routers share a downstream LAN segment towards common downstream routers, only the best upstream router (according to its unicast metric towards the Source) is selected as forwarder; those routers use “ASSERT” messages to perform this best-forwarder election. Moreover, if an earlier pruned router wants to join the Group again before the pruned state has timed out, it sends a GRAFT message to its upstream neighbor.
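
The interplay of prune timeout, state refresh and graft can be sketched as a small timer table. This is a minimal model, not the protocol state machine: `PruneState`, its method names and the constant `PRUNE_HOLD_SECONDS` are hypothetical, the 180 seconds simply mirror the roughly 3 minutes quoted above, and time is passed in explicitly so the behaviour is easy to follow.

```python
PRUNE_HOLD_SECONDS = 180  # assumption: the "approximately 3 minutes" quoted above


class PruneState:
    """Hypothetical prune timers of one (S,G) entry, keyed by outgoing interface."""

    def __init__(self):
        self.expires_at = {}  # interface -> time at which the prune lapses

    def prune(self, iface, now):
        # A PRUNE received on this interface stops forwarding for a while.
        self.expires_at[iface] = now + PRUNE_HOLD_SECONDS

    def state_refresh(self, iface, now):
        # A state refresh restarts the timer, but only while the prune is active.
        if iface in self.expires_at and now < self.expires_at[iface]:
            self.expires_at[iface] = now + PRUNE_HOLD_SECONDS

    def graft(self, iface):
        # A GRAFT removes the prune immediately, without waiting for the timeout.
        self.expires_at.pop(iface, None)

    def is_forwarding(self, iface, now):
        # Forwarding resumes (re-flooding) once the prune has timed out.
        return iface not in self.expires_at or now >= self.expires_at[iface]


state = PruneState()
state.prune("Serial0/0.2", now=0)
pruned_at_179 = state.is_forwarding("Serial0/0.2", now=179)     # still pruned
reflooded_at_180 = state.is_forwarding("Serial0/0.2", now=180)  # timer expired
```

Without state refresh every pruned interface falls back to forwarding after the hold time, which is the periodic re-flooding described above.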

Finally, when multiple routers are present on the same (LAN) interface, an election is also held to define the DESIGNATED Router (DR). With equal DR priority, the router with the highest IP address is selected as the DR. With IGMPv1 the DR is also the only router that generates periodic IGMP queries to hosts; with IGMPv2 the querier is elected separately, and the router with the lowest IP address wins.
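
The DR election can be sketched as a simple comparison. The function names `elect_dr` and `elect_igmp_querier` are hypothetical; the addresses are the three routers seen on the Serial0/0.1 segment in this lab, all with DR priority 1. Note that with IGMPv2 the querier election is separate and picks the lowest address, which is what the RT6 output for Serial0/0.1 shows.

```python
import ipaddress


def elect_dr(routers):
    """routers: list of (ip_string, dr_priority) tuples on one segment.

    Highest DR priority wins; ties are broken by the highest IP address.
    """
    return max(routers, key=lambda r: (r[1], ipaddress.ip_address(r[0])))[0]


def elect_igmp_querier(addresses):
    """IGMPv2 querier election: the lowest IP address on the segment wins."""
    return str(min(ipaddress.ip_address(a) for a in addresses))


segment = [("138.1.11.130", 1), ("138.1.11.155", 1), ("138.1.11.156", 1)]
dr = elect_dr(segment)
querier = elect_igmp_querier([ip for ip, _ in segment])
```

Comparing `ipaddress` objects rather than strings matters: a plain string comparison would, for example, rank "10.0.0.9" above "10.0.0.10".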

PIM Dense Mode is very simple to configure but, due to its push model, prone to inefficiency: the RPF rule is used only to avoid loops, and traffic is flooded by default on all downstream interfaces. Only explicit pruning, initiated by PIM routers, stops it. Each router keeps a specific source tree entry for every Source and Group combination (S,G), plus all related prune state, which moreover needs to be re-established periodically (every 3 minutes the prune entries are flushed). Its memory and CPU requirements are generally high because each single Source S and Group G has a dedicated (S,G) distribution tree, and all routers, including routers not interested at all, need to learn it and then prune it.

In this example the source is behind RT5 eth0/0. There is a receiver behind RT6 eth0/0.60, and an interface on RT3 has also joined.

hostname RT5

!

ip multicast-routing <<<<<<< ALWAYS NEEDED to be able to process Multicasting in any form

!

interface Ethernet0/0

ip address 192.168.1.120 255.255.255.0

ip pim dense-mode <<<<<<< Also needed on the interface where the source is

!

interface Ethernet0/0.33

encapsulation dot1Q 33

ip address 150.1.1.1 255.255.255.0

ip pim dense-mode

!

interface Ethernet0/0.104

encapsulation dot1Q 104

ip address 138.1.56.5 255.255.255.252

ip pim dense-mode

delay 20000 <<<<<< Influence the Unicast path (and consequently the RPF)

!

interface Serial0/0

ip address 138.1.11.155 255.255.255.224

ip pim dense-mode

encapsulation frame-relay

ip mroute-cache <<<<<<< May be removed by default

no arp frame-relay

frame-relay map ip 138.1.11.130 402

frame-relay map ip 138.1.11.156 402 broadcast <<<<<<< Needed to transport Multicast. If the FR cloud does not support it, ip pim nbma-mode is required (multicast is encapsulated as unicast within the Multipoint cloud)

no frame-relay inverse-arp

!

router eigrp 1

passive-interface Ethernet0/0.33

network 138.1.11.128 0.0.0.31

network 138.1.56.4 0.0.0.3

network 150.1.1.0 0.0.0.255

no auto-summary

eigrp stub connected

!

end

hostname RT6

!

ip multicast-routing

!

interface Ethernet0/0

ip address 192.168.1.119 255.255.255.0

ip access-group 101 in

!

interface Ethernet0/0.2

encapsulation dot1Q 2

ip address 10.1.35.6 255.255.255.0

ip pim dense-mode

!

interface Ethernet0/0.60

encapsulation dot1Q 60

ip address 10.1.60.6 255.255.255.0

ip pim dense-mode

!

interface Ethernet0/0.104

encapsulation dot1Q 104

ip address 138.1.56.6 255.255.255.252

ip pim dense-mode

delay 20000

!

interface Serial0/0

no ip address

encapsulation frame-relay

clockrate 2000000

ip mroute-cache

!

interface Serial0/0.1 multipoint

ip address 138.1.11.156 255.255.255.224

ip pim dense-mode

no ip split-horizon eigrp 1 <<<<<< Never forget it on a Hub

no arp frame-relay

frame-relay map ip 138.1.11.130 201 broadcast

frame-relay map ip 138.1.11.155 204 broadcast

no frame-relay inverse-arp

!

interface Serial0/0.2 point-to-point

ip address 138.1.15.10 255.255.255.248

ip pim dense-mode

no cdp enable

frame-relay interface-dlci 203 <<<<< On point-to-point there is no problem for Multicasting. No special configuration is needed

!

router eigrp 1

passive-interface Ethernet0/0.60

network 10.1.35.0 0.0.0.255

network 10.1.60.0 0.0.0.255

network 138.1.11.128 0.0.0.31

network 138.1.15.8 0.0.0.7

network 138.1.56.4 0.0.0.3

no auto-summary

!

ip mroute 192.168.1.1 255.255.255.255 150.1.1.1 <<<<<<< Forcing RPF to accept it from this direction (actually the router has a better local path). This is one way to alter the unicast dependency of PIM. Another is to use DVMRP.

!

access-list 1 deny 224.0.0.0 15.255.255.255

access-list 1 permit any

!

end

On RT5:

RT5#sh ip mcache <<<<<<< NB: mroute-cache may be removed by default

IP Multicast Fast-Switching Cache

(192.168.1.1/32, 239.255.1.1), Ethernet0/0, Last used: 00:00:00, MinMTU: 1500

Serial0/0 MAC Header: 64210800

RT5#sh ip pim neighbor

PIM Neighbor Table

Neighbor Interface Uptime/Expires Ver DR

Address Prio/Mode

138.1.56.6 Ethernet0/0.104 02:02:56/00:01:26 v2 1 / DR S <<<< Who is the DR

138.1.11.156 Serial0/0 02:02:50/00:01:33 v2 1 / DR S

145.1.13.13 Ethernet0/0 00:33:44/00:01:28 v2 1 / S

RT5#sh ip pim interface

Address Interface Ver/ Nbr Query DR DR

Mode Count Intvl Prior

138.1.56.5 Ethernet0/0.104 v2/D 1 30 1 138.1.56.6

138.1.11.155 Serial0/0 v2/D 1 30 1 138.1.11.156

192.168.1.120 Ethernet0/0 v2/D 1 30 1 192.168.1.120

RT5#sh ip mroute active

Active IP Multicast Sources – sending >= 4 kbps <<<<<<<<< Info about the Sources !!

Group: 239.255.1.1, (?)

Source: 192.168.1.1 (?)

Rate: 398 pps/4281 kbps(1sec), 3529 kbps(last 20 secs), 191 kbps(life avg)

RT5#sh ip mroute

IP Multicast Routing Table

Flags: D – Dense, S – Sparse, B – Bidir Group, s – SSM Group, C – Connected,

L – Local, P – Pruned, R – RP-bit set, F – Register flag,

T – SPT-bit set, J – Join SPT, M – MSDP created entry,

X – Proxy Join Timer Running, A – Candidate for MSDP Advertisement,

U – URD, I – Received Source Specific Host Report, Z – Multicast Tunnel

Y – Joined MDT-data group, y – Sending to MDT-data group

Outgoing interface flags: H – Hardware switched, A – Assert winner

Timers: Uptime/Expires

Interface state: Interface, Next-Hop or VCD, State/Mode

(*, 239.255.1.1), 00:34:55/stopped, RP 0.0.0.0, flags: D

Incoming interface: Null, RPF nbr 0.0.0.0

Outgoing interface list:

Serial0/0, Forward/Dense, 00:34:55/00:00:00

Ethernet0/0, Forward/Dense, 00:34:25/00:00:00

Ethernet0/0.104, Forward/Dense, 00:34:55/00:00:00

(192.168.1.1, 239.255.1.1), 00:06:11/00:02:50, flags: T

Incoming interface: Ethernet0/0, RPF nbr 0.0.0.0

Outgoing interface list: <<<<< All these interfaces will get it, unless all routers start to prune……

Ethernet0/0.104, Prune/Dense, 00:00:06/00:02:53 <<<< Prune

Serial0/0, Forward/Dense, 00:06:11/00:00:00

RT5#sh ip mroute count

IP Multicast Statistics

6 routes using 5316 bytes of memory

5 groups, 0.20 average sources per group

Forwarding Counts: Pkt Count/Pkts per second/Avg Pkt Size/Kilobits per second

Other counts: Total/RPF failed/Other drops(OIF-null, rate-limit etc)

Group: 239.255.1.1, Source count: 1, Packets forwarded: 16756, Packets received: 17027

Source: 192.168.1.1/32, Forwarding: 16756/17/1343/191, Other: 17027/271/0

RT5#sh ip mroute count

IP Multicast Statistics

6 routes using 5316 bytes of memory

5 groups, 0.20 average sources per group

Forwarding Counts: Pkt Count/Pkts per second/Avg Pkt Size/Kilobits per second

Other counts: Total/RPF failed/Other drops(OIF-null, rate-limit etc)

Group: 239.255.1.1, Source count: 1, Packets forwarded: 16764, Packets received: 17035

Source: 192.168.1.1/32, Forwarding: 16764/17/1343/191, Other: 17035/271/0
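
The counters in the two samples above can be cross-checked with a few lines of Python. This is only a sketch for reading the output: the function `parse_source_line` and the key names are hypothetical, the field order follows the “Forwarding Counts” and “Other counts” legend printed by the command, and the relation checked at the end is simply what the numbers in this lab show.

```python
import re


def parse_source_line(line):
    """Split a 'Source:' line of 'sh ip mroute count' into named counters."""
    m = re.search(
        r"Forwarding: (\d+)/(\d+)/(\d+)/(\d+), Other: (\d+)/(\d+)/(\d+)", line
    )
    if m is None:
        raise ValueError("not a Source: counter line")
    keys = ("fwd_pkts", "pps", "avg_size", "kbps",
            "total", "rpf_failed", "other_drops")
    return dict(zip(keys, map(int, m.groups())))


first = parse_source_line(
    "Source: 192.168.1.1/32, Forwarding: 16756/17/1343/191, Other: 17027/271/0")
second = parse_source_line(
    "Source: 192.168.1.1/32, Forwarding: 16764/17/1343/191, Other: 17035/271/0")

# In both samples: forwarded = total received - RPF failed - other drops.
for c in (first, second):
    assert c["fwd_pkts"] == c["total"] - c["rpf_failed"] - c["other_drops"]

newly_forwarded = second["fwd_pkts"] - first["fwd_pkts"]
new_rpf_failures = second["rpf_failed"] - first["rpf_failed"]
```

Between the two samples the forwarded count grows while the RPF-failed count stays at 271, so no new packets arrived on a non-Parent Link in that interval.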

On RT6:

RT6#sh ip mcache

IP Multicast Fast-Switching Cache

(192.168.1.1/32, 239.255.1.1), Serial0/0.1, Last used: 00:00:00, MinMTU: 1500

Ethernet0/0.60 MAC Header: 01005E7F01010005325D44608100003C0800

RT6#sh ip igmp groups

IGMP Connected Group Membership

Group Address Interface Uptime Expires Last Reporter

239.255.1.1 Ethernet0/0.60 00:10:04 00:02:26 10.1.60.100

RT6#sh ip igmp membership

Flags: A – aggregate, T – tracked

L – Local, S – static, V – virtual, R – Reported through v3

I – v3lite, U – Urd, M – SSM (S,G) channel

1,2,3 – The version of IGMP the group is in

Channel/Group-Flags:

/ – Filtering entry (Exclude mode (S,G), Include mode (*,G))

Reporter:

<mac-or-ip-address> – last reporter if group is not explicitly tracked

<n>/<m> – <n> reporter in include mode, <m> reporter in exclude

Channel/Group Reporter Uptime Exp. Flags Interface

*,239.255.1.1 10.1.60.100 00:10:08 02:22 2A Et0/0.60

RT6#sh ip igmp interface s0/0.1

Serial0/0.1 is up, line protocol is up

Internet address is 138.1.11.156/27

IGMP is enabled on interface

Current IGMP host version is 2

Current IGMP router version is 2

IGMP query interval is 60 seconds

IGMP querier timeout is 120 seconds

IGMP max query response time is 10 seconds

Last member query count is 2

Last member query response interval is 1000 ms

Inbound IGMP access group is not set

IGMP activity: 1 joins, 0 leaves

Multicast routing is enabled on interface

Multicast TTL threshold is 0

Multicast designated router (DR) is 138.1.11.156 (this system) <<<<< Another way to know who is the DR. The DR is the highest !!!!

IGMP querying router is 138.1.11.130 <<<<< Querying router is instead the lowest !!!!

No multicast groups joined by this system

RT6#sh ip pim neighbor

PIM Neighbor Table

Neighbor Interface Uptime/Expires Ver DR

Address Prio/Mode

10.1.35.8 Ethernet0/0.2 02:47:29/00:01:36 v2 1 / DR S

140.1.2.1 Ethernet0/0.102 02:48:50/00:01:15 v2 1 / S

138.1.56.5 Ethernet0/0.104 02:49:04/00:01:40 v2 1 / S

138.1.11.130 Serial0/0.1 02:49:28/00:01:34 v2 1 / S

138.1.11.155 Serial0/0.1 02:49:28/00:01:36 v2 1 / S

138.1.15.11 Serial0/0.2 02:24:56/00:01:37 v2 1 / S

RT6#sh ip pim interface

Address Interface Ver/ Nbr Query DR DR

Mode Count Intvl Prior

10.1.35.6 Ethernet0/0.2 v2/D 1 30 1 10.1.35.8

10.1.60.6 Ethernet0/0.60 v2/D 0 30 1 10.1.60.6

150.50.7.5 Ethernet0/0.102 v2/D 1 30 1 150.50.7.5

138.1.56.6 Ethernet0/0.104 v2/D 1 30 1 138.1.56.6

138.1.11.156 Serial0/0.1 v2/D 2 30 1 138.1.11.156

138.1.15.10 Serial0/0.2 v2/D 1 30 1 0.0.0.0

RT6#sh ip mroute active

Active IP Multicast Sources – sending >= 4 kbps

Group: 239.255.1.1, (?)

Source: 192.168.1.1 (?)

Rate: 17 pps/191 kbps(1sec), 191 kbps(last 50 secs), 91 kbps(life avg)

RT6#sh ip mroute

IP Multicast Routing Table

Flags: D – Dense, S – Sparse, B – Bidir Group, s – SSM Group, C – Connected,

L – Local, P – Pruned, R – RP-bit set, F – Register flag,

T – SPT-bit set, J – Join SPT, M – MSDP created entry,

X – Proxy Join Timer Running, A – Candidate for MSDP Advertisement,

U – URD, I – Received Source Specific Host Report, Z – Multicast Tunnel

Y – Joined MDT-data group, y – Sending to MDT-data group

Outgoing interface flags: H – Hardware switched, A – Assert winner

Timers: Uptime/Expires

Interface state: Interface, Next-Hop or VCD, State/Mode

(*, 239.255.1.1), 01:01:42/stopped, RP 0.0.0.0, flags: DC

Incoming interface: Null, RPF nbr 0.0.0.0

Outgoing interface list:

Ethernet0/0.60, Forward/Dense, 00:23:21/00:00:00 <<<<<<< This is the problem: lots of interfaces to keep track of.

Serial0/0.2, Forward/Dense, 01:01:42/00:00:00

Serial0/0.1, Forward/Dense, 01:01:42/00:00:00

Ethernet0/0.104, Forward/Dense, 01:01:42/00:00:00

Ethernet0/0.102, Forward/Dense, 01:01:42/00:00:00

Ethernet0/0.2, Forward/Dense, 01:01:42/00:00:00

(192.168.1.1, 239.255.1.1), 00:19:12/00:02:59, flags: T

Incoming interface: Serial0/0.1, RPF nbr 138.1.11.155, Mroute

Outgoing interface list:

Ethernet0/0.2, Forward/Dense, 00:00:29/00:00:00 <<<<<<<<< lots of interfaces…

Ethernet0/0.102, Prune/Dense, 00:00:36/00:02:23, A

Ethernet0/0.104, Prune/Dense, 00:06:46/00:02:17, A

Serial0/0.2, Prune/Dense, 00:03:46/00:02:19

Ethernet0/0.60, Forward/Dense, 00:19:12/00:00:00

RT6#sh ip mroute count

IP Multicast Statistics

4 routes using 5170 bytes of memory

3 groups, 0.33 average sources per group

Forwarding Counts: Pkt Count/Pkts per second/Avg Pkt Size/Kilobits per second

Other counts: Total/RPF failed/Other drops(OIF-null, rate-limit etc)

Group: 239.255.1.1, Source count: 1, Packets forwarded: 15754, Packets received: 15775

Source: 192.168.1.1/32, Forwarding: 15754/17/1343/191, Other: 15775/21/0

RT6#sh ip mroute count

IP Multicast Statistics

4 routes using 5170 bytes of memory

3 groups, 0.33 average sources per group

Forwarding Counts: Pkt Count/Pkts per second/Avg Pkt Size/Kilobits per second

Other counts: Total/RPF failed/Other drops(OIF-null, rate-limit etc)

Group: 239.255.1.1, Source count: 1, Packets forwarded: 15773, Packets received: 15794

Source: 192.168.1.1/32, Forwarding: 15773/17/1343/191, Other: 15794/21/0

=> Just observe the number of interfaces and the amount of pruning needed: very inefficient!